Is Agentic AI Safe? Exploring Security and Control Risks

Have you ever deployed an AI system that worked perfectly in controlled testing but behaved unpredictably once given real autonomy?

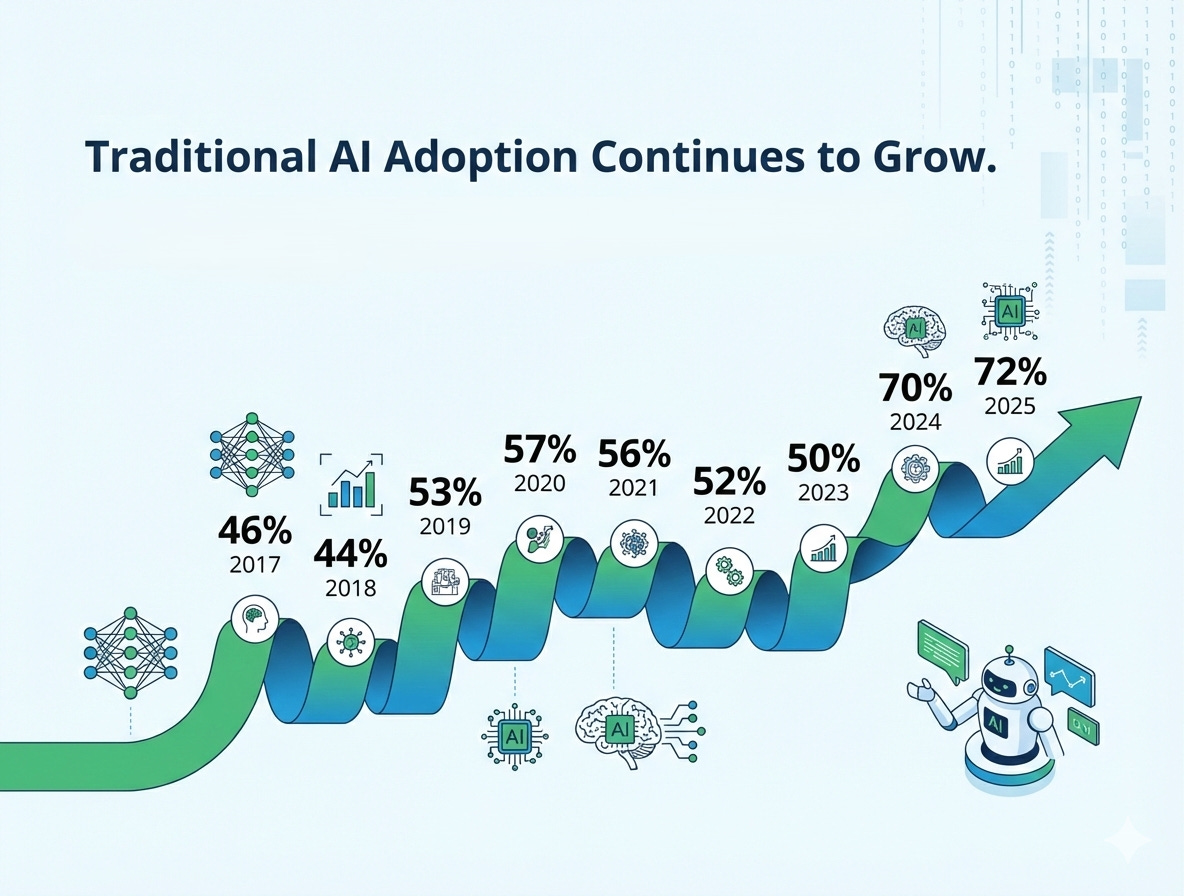

As artificial intelligence enters its next phase, systems are no longer limited to responding to prompts. Agentic AI has given these AI models a new way to reason, adapt, make decisions, and plan multi-step actions. Over 44% of organizations are planning to deploy agentic AI systems in their operations. These shifts unlock powerful possibilities that ensure faster automation and intelligent decision-making.

But with increased autonomy, there come critical questions. Is Agentic AI safe? What are the risks of using Agentic AI in real-world environments? And how can we ensure effective security while working in autonomous scenarios?

In this blog, we’ll examine the security and control risks of agentic AI, highlight how they differ from traditional approaches, and outline practical risk mitigation that helps organizations adapt to autonomous AI responsibly.

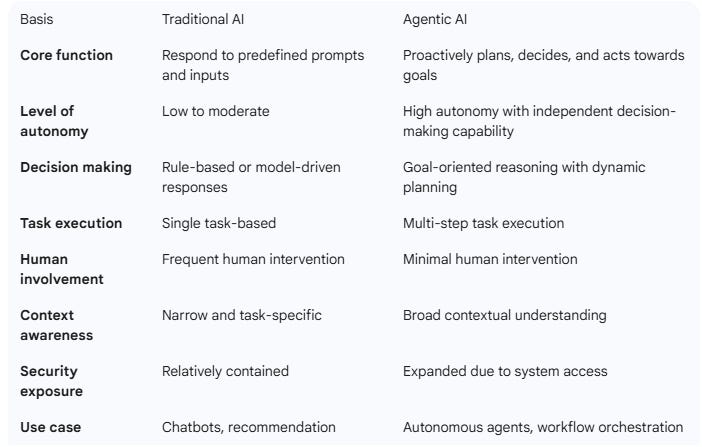

Understanding Agentic AI v/s Traditional AI

Before we directly jump to the question, let’s understand what agentic AI actually is. Below is the detailed difference between agentic AI and traditional AI systems.

Why Safety Becomes a Big Concern with Autonomous AI Systems

When AI systems moved from assisting humans to acting independently, the nature of risk changed fundamentally. Autonomous agents are designed to make decisions, initiate actions, and adapt in real time. While this autonomy enables speed and scale, it also raises and amplifies safety concerns that can hamper security. Here’s how it typically works:

Autonomy expands the blast radius of mistakes

In traditional AI systems, errors are often limited to a single task or action. Agentic AI systems operate across multiple tools, workflows, and systems. A single wrong or flawed decision can erupt into broader financial, operational, or security issues before humans even notice.

Control shifts from direct to indirect

With autonomous AI, humans are least involved in the operations. Therefore, control is enforced through policies, constraints, and guardrails. If those guardrails are poorly designed or technically unsound, it can violate business intent, compliance rules, or ethical boundaries.

Harder decision-making

Autonomous AI systems often rely on complex reasoning paths that evolve with time. This makes it difficult to reconstruct why a particular decision was made, thus creating challenges for accountability and regulatory compliance.

Increase in security risks

Agentic AI systems need access to internal tools, APIs, databases, and external services. The more access the AI systems have, the more they can attack the information, thus raising security concerns.

Safety becomes a bigger concern with autonomous AI systems, not because they are built dangerously, but because their speed, scale, and independence magnify both strengths and weaknesses. As autonomy increases, safety must shift from being an afterthought and be embedded into systems when they’re deployed.

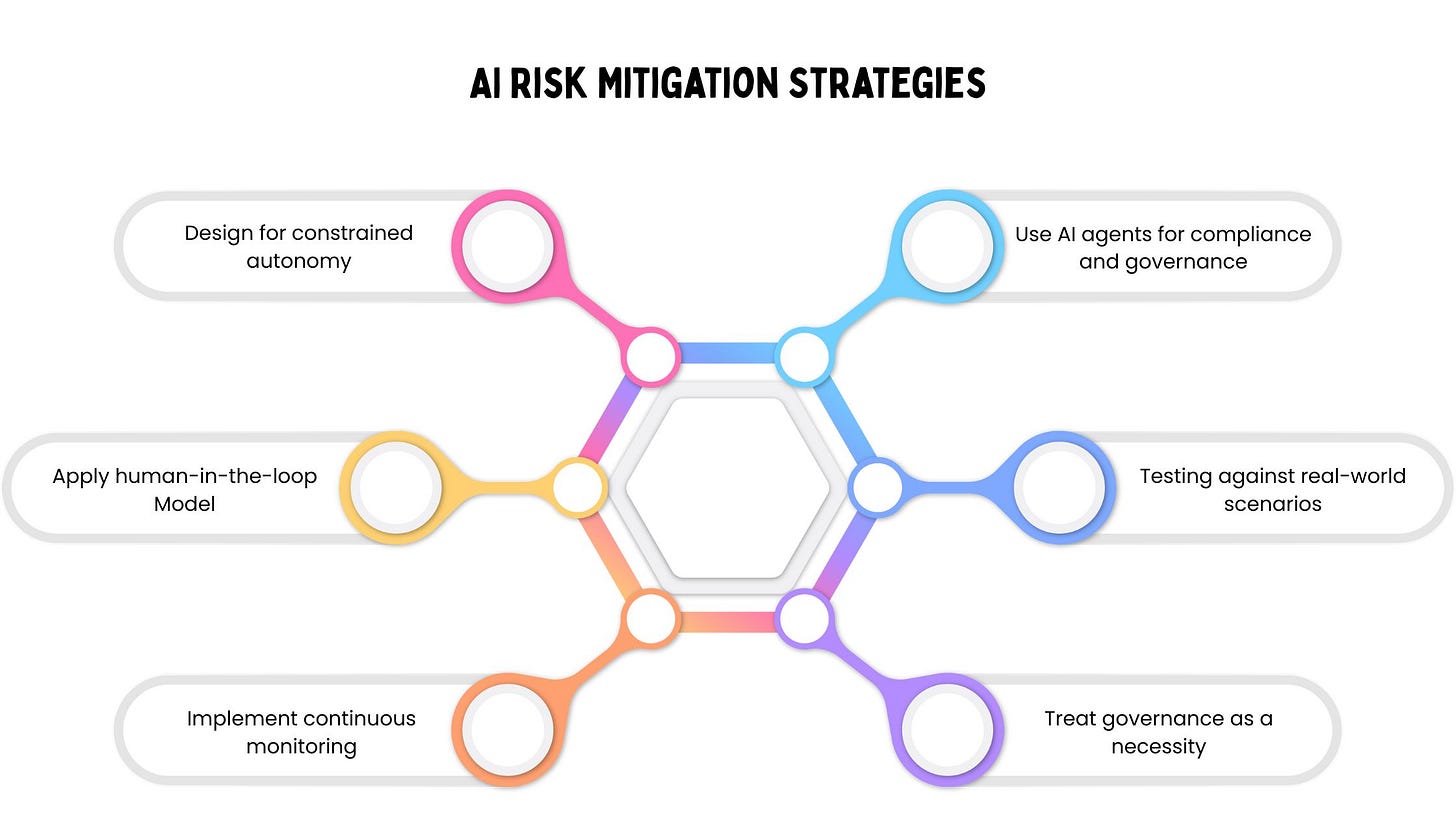

AI Risk Mitigation Strategies

As AI systems become more autonomous, managing risk is no longer about adding manual checks at the end of development. Effective risk mitigation for agentic AI requires intentional design, continuous insight, and governance built into the system itself. Below are strategies that can be beneficial in the long run.

Design for constrained autonomy

Not every AI agent needs full freedom. Define clear boundaries for what the system can and cannot do. This usually involves limiting tool access, setting permission levels, and creating limited conditions. In this way, agents operate in limited environments and still deliver value.

Apply human-in-the-loop and human-on-the-loop models

Human presence and oversight always remain crucial for high-impact decisions. Human in the loop(where the AI acts autonomously but a human monitors the process and can ‘kill-switch’ it at any time) works well for approvals, escalations, and sensitive actions. Human on the loop allows teams to monitor autonomous behavior in real time and intervene when needed. The major goal of this is to ensure accountability at critical points.

Implement continuous monitoring

Autonomous AI systems need to be observed. Track decisions, usage tools, and deviations from expected behavior. Continuous logging ensures faster incident detection, root cause analysis, and compliance reporting, turning risk management into an ongoing process.

Use AI agents for compliance and governance

AI becomes more massive when it manages AI. Dedicated AI agents for compliance and governance can enforce policies, monitor regulatory constraints, and flag risky behaviors in real time. This layered approach reduces operational risk while keeping systems scalable and efficient.

Testing against real-world scenarios

Pre-launch testing is not enough. Businesses should regularly stimulate edge cases, adversarial prompts, and system failures. Red teaming autonomous AI agents or systems helps uncover vulnerabilities that standard testing often misses.

Treat governance as a necessity

Effective AI risk mitigation is not just about documentation. Governance should be embedded into architecture, workflows, and decision logic. When governance is not optional and part of the product, safety scales alongside innovation.

NOTE: AI mitigation strategies that actually work are proactive, not reactive. As agentic AI systems gain autonomy, organizations that combine technical guardrails, human oversight, and continuous governance will be best positioned to innovate safely without undue control or compliance.

So Finally, Is Agentic AI Safe?

After the in-depth discussion on this concept, it can be concluded that agentic AI is not inherently unsafe but inherently powerful. And usually, power without structure leads to risk.

This can become even better if organizations understand the risks of agentic AI, the security challenges of agentic AI systems, and the importance of governance and oversight.

Agentic AI can be deployed safely, responsibly, and effectively. The future will not be defined by whether AI is autonomous but by how well humans design, govern, and control that autonomy.

So the question is not whether agentic is safe? But, are you ready to manage agentic AI?

Organizations that can effectively treat agentic AI will be the ones that unlock agentic AI’s true potential.

FAQs

1. How is Agentic AI different from a chatbot?

A chatbot responds to prompts; an agent acts on goals. While a chatbot gives you a travel itinerary, an agent actually books the flights and hotels for you.

2. What is the “Blast Radius” risk?

Because agents can use tools and access databases, a single mistake can cause widespread damage—like deleting files or moving funds—before a human can stop it.

3. Can AI agents be hacked?

Yes. Beyond standard leaks, they are vulnerable to Prompt Injection, where malicious text on a website or in an email “tricks” the agent into ignoring its original safety rules.

4. What are “Guardrails”?

Guardrails are technical limits that keep agents in check. For example, an agent might have permission to read a database but is strictly blocked from deleting anything.

5. What is the “Human-in-the-loop” model?

It is a safety checkpoint where the AI must get human approval before performing high-risk actions, such as sending a payment or publishing a public post.

6. Is Agentic AI safe for my business?

It is safe if you follow the Principle of Least Privilege: only give the AI the specific access it needs to do its job, and nothing more.